Imagine a world where you can create stunning, high-quality digital art just by describing it. You no longer have to imagine it: thanks to Stable Diffusion, that world is here.

This powerful AI image generation tool uses a deep learning model built on a diffusion process to produce realistic and artistic images from simple text prompts.

In this blog post, we will walk you through the fascinating world of Stable Diffusion, covering everything from understanding the technology behind it to mastering prompts, generating images, and even exploring advanced techniques for truly awe-inspiring AI-generated art.

We’ll also show you how to use Stable Diffusion effectively to enhance your creative projects.

Short Summary

- Stable Diffusion is an AI image generator for various applications, from digital art to scientific research.

- Getting started with Stable Diffusion means downloading and running the project file locally, using a cloud service, or accessing it through an online platform.

- Tips such as inputting specific prompts, customizing parameters, and post-processing images can help maximize results when using Stable Diffusion.

Stable Diffusion is an AI image generation tool that creates high-quality, realistic, and artistic images from text prompts. It uses a diffusion process to generate images for a wide range of applications, from digital art and advertising to video games and scientific studies.

With its ability to create images for commercial and non-commercial purposes, Stable Diffusion is rapidly becoming one of the most popular AI image generators available today.

In AI-generated art, Stable Diffusion stands out as an open-source variant of the Latent Diffusion architecture, capable of producing images in various styles, such as anime, photorealistic, and even artistic styles inspired by famous painters.

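The diffusion process works in two directions. During training, noise is added to images step by step and the model learns to predict that noise. During generation, the model starts from random noise and removes it gradually, guided by the text prompt, until a final image emerges that is both visually appealing and contextually accurate.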
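The noising half of this process can be sketched with a few lines of arithmetic. The snippet below is a toy illustration, not Stable Diffusion's actual code: the linear noise schedule, its values, and all function names are assumptions chosen for illustration. It adds noise to a tiny list of "pixel" values and shows that knowing the added noise is enough to get the original back, which is why a model that predicts the noise can be used to remove it.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (illustrative values)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_signal(betas):
    """Fraction of the original signal variance left after each step."""
    kept, out = 1.0, []
    for beta in betas:
        kept *= 1.0 - beta
        out.append(kept)
    return out


def add_noise(pixels, noise, signal_kept):
    """Blend clean pixels with Gaussian noise for one chosen timestep."""
    a = math.sqrt(signal_kept)
    b = math.sqrt(1.0 - signal_kept)
    return [a * p + b * n for p, n in zip(pixels, noise)]


def remove_noise(noisy, noise, signal_kept):
    """Invert add_noise when the noise is known (a model must predict it)."""
    a = math.sqrt(signal_kept)
    b = math.sqrt(1.0 - signal_kept)
    return [(x - b * n) / a for x, n in zip(noisy, noise)]


rng = random.Random(0)
alpha_bar = cumulative_signal(linear_beta_schedule(1000))
pixels = [0.2, -0.5, 0.9, 0.0]
noise = [rng.gauss(0.0, 1.0) for _ in pixels]

noisy = add_noise(pixels, noise, alpha_bar[499])        # halfway through
recovered = remove_noise(noisy, noise, alpha_bar[499])  # matches pixels
```

By the last step almost none of the original signal is left, which is why generation can start from pure random noise.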

The Technology Behind Stable Diffusion

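The technology behind Stable Diffusion is a deep learning text-to-image model based on latent diffusion. It combines three components: a text encoder (CLIP) that turns the prompt into numerical embeddings, a U-Net that removes noise step by step, and a variational autoencoder (VAE) that converts between images and a compressed latent space. It does not rely on generative adversarial networks (GANs).

The text encoder converts your prompt into embeddings that guide the U-Net. Denoising runs in the compressed latent space rather than on full-resolution pixels, and the VAE decoder then turns the finished latent into the final image.

Working in latent space is what keeps generation fast enough for consumer graphics cards while still producing high-quality, consistent images that follow the text input.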
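To get a feel for how much smaller the latent space is than the image itself, here is a short worked calculation. It is a sketch that assumes the figures used by the version 1 and 2 base models (each side downscaled by 8, with 4 latent channels); the function names are made up for this example.

```python
def latent_shape(width, height, downscale=8, channels=4):
    """Shape (channels, rows, cols) of the latent for a given image size."""
    if width % downscale or height % downscale:
        raise ValueError(f"width and height must be multiples of {downscale}")
    return channels, height // downscale, width // downscale


def count(shape):
    """Number of values in an array of the given shape."""
    total = 1
    for size in shape:
        total *= size
    return total


image = (3, 512, 512)                 # RGB pixels
latent = latent_shape(512, 512)       # (4, 64, 64)
ratio = count(image) / count(latent)  # 48.0
print(latent, ratio)
```

Under these assumptions the model denoises about 48 times fewer values than it would if it worked on pixels directly.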

Applications of Stable Diffusion

The applications of Stable Diffusion are vast and varied, ranging from digital art and advertising to e-commerce, video games, and even scientific research.

The ability to generate images in many styles, such as anime, makes Stable Diffusion a versatile and powerful tool for creators across industries.

With the right prompts and models, users can even generate realistic images of people, landscapes, animals, and fantasy worlds, showcasing the true potential of this incredible AI image generator.

Getting Started with Stable Diffusion

There are several ways to start with Stable Diffusion, including running it locally on your computer, using cloud services, or accessing it online. Each option offers advantages and limitations, which we will explore in more detail in the following subsections.

Ultimately, your chosen method will depend on your specific needs, available resources, and the level of control you desire over the image generation process.

Before running Stable Diffusion locally, download the project file from the GitHub page and extract it. The steps in this post assume the Automatic1111 web UI, whose archive extracts to the stable-diffusion-webui-master folder. This gives you the folders and files needed to generate images on your own machine. Cloud services and online platforms do not require this download.

Running Stable Diffusion Locally

Running Stable Diffusion locally on your computer offers greater control and customization capabilities but requires powerful hardware to ensure optimal performance. To follow the steps below, you need a Windows 10 or 11 system with a discrete NVIDIA video card featuring at least 4GB of VRAM (video memory).

Once you have the necessary hardware, navigate to the stable-diffusion-webui-master folder and execute the webui-user.bat file. This will launch the user interface, allowing you to input text prompts and generate images on your local machine.

Utilizing Cloud Services for Stable Diffusion

Cloud services provide a more accessible option for those without powerful hardware: you get fast image generation on high-end GPUs without investing in your own equipment.

However, cloud services may have limitations, such as restrictions on custom model uploads.

One example of a cloud service that facilitates the operation of Stable Diffusion is RunDiffusion, which allows you to use the Automatic1111 web UI on a powerful computer to create visually captivating images in seconds.

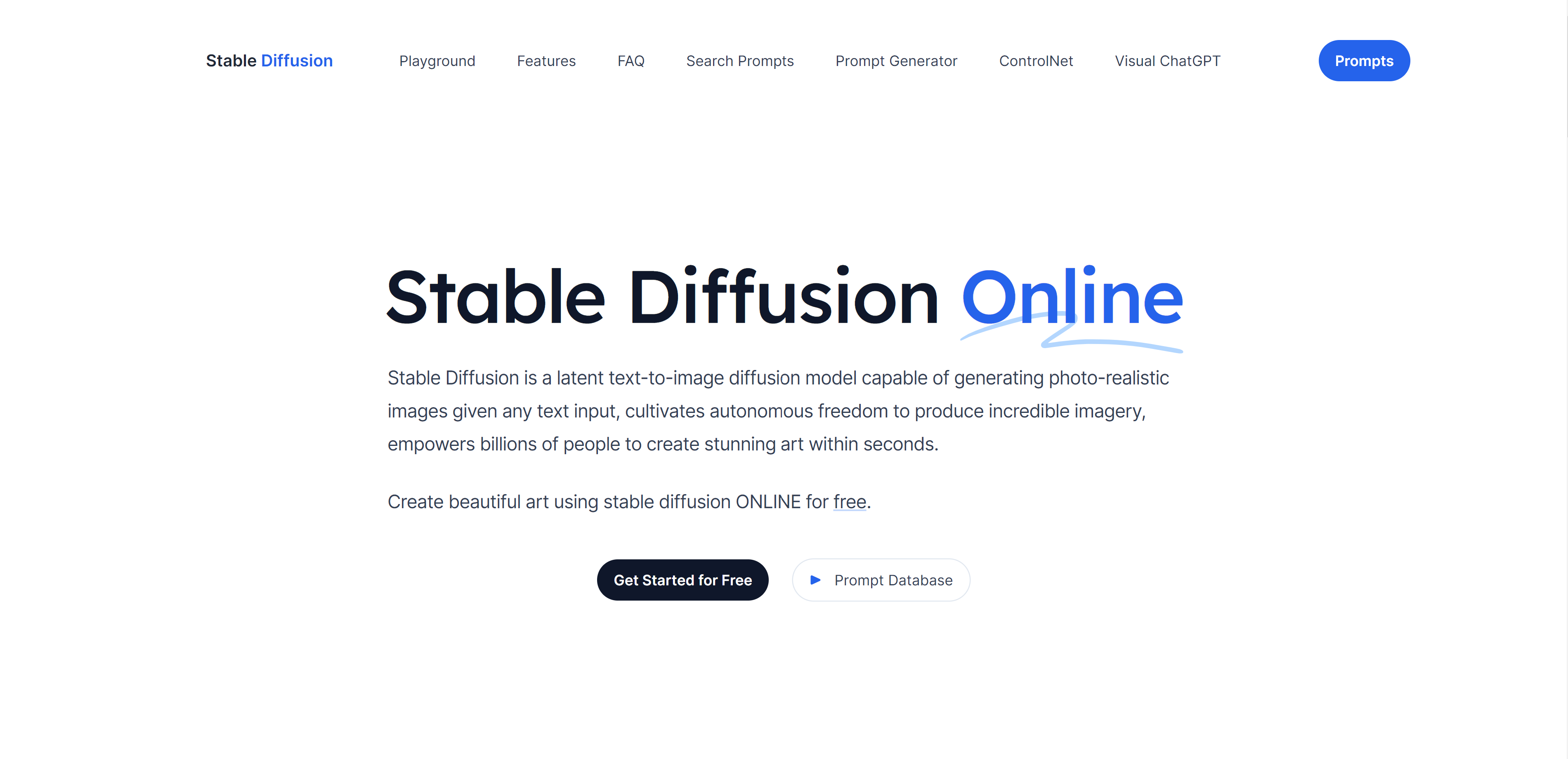

Accessing Stable Diffusion Online

Accessing Stable Diffusion through online platforms offers an easy and cost-effective way to generate AI images without powerful hardware or local installations.

Platforms like Fotor offer Stable Diffusion-based image generation from natural language descriptions, providing a user-friendly experience for those new to AI image generation. Midjourney is a popular alternative in the same category, but it runs its own proprietary model rather than Stable Diffusion.

While these platforms offer less customization than local installations or cloud services (for example, you usually cannot load your own Stable Diffusion checkpoints, the files that hold a trained model), they still provide an excellent starting point for those looking to explore the capabilities of Stable Diffusion.

Mastering Prompts for Effective Image Generation

Mastering prompts is a crucial part of generating high-quality images using Stable Diffusion. The AI model relies on your prompts to understand the desired context and generate images accordingly.

By providing clear and precise prompts, you can significantly improve the accuracy and relevance of the generated images.

This section will discuss the importance of specificity in prompts and the use of powerful keywords to guide the image-generation process. As you input prompts in Stable Diffusion, remember that the AI model is only as good as the information you provide.

Ensuring your prompts are comprehensive and precise will produce better results and help the AI understand the context in which you want the image generated.

Additionally, incorporating pertinent keywords and phrases in your prompts will refine the generated images, ensuring they closely align with your vision.

Importance of Specificity in Prompts

Being specific in your prompts is essential for effective image generation. Vague prompts can lead to unclear or irrelevant results, whereas specific prompts provide the AI with the necessary context to generate accurate images.

For example, a prompt like “a flower” is vague and could result in any number of flower images, whereas a prompt like “a red rose in a vase” is specific and provides the AI with a much clearer understanding of the desired image.

Even the same prompt produces different images from run to run, because each generation starts from different random noise (a different seed). A specific prompt narrows that variation so the results stay close to what you have in mind. Ultimately, the more specific and detailed your prompts are, the better the AI-generated image will align with your vision.
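One simple way to keep prompts specific is to assemble them from separate parts. The helper below is a hypothetical convenience function, not part of Stable Diffusion; it assumes the common convention of writing a prompt as comma-separated phrases.

```python
def build_prompt(subject, details=(), style=None):
    """Join a subject, optional details, and an optional style into one prompt."""
    parts = [subject.strip()]
    parts.extend(d.strip() for d in details if d.strip())
    if style:
        parts.append(style.strip())
    return ", ".join(parts)


vague = build_prompt("a flower")
specific = build_prompt(
    "a red rose in a vase",
    details=["on a wooden table", "soft window light"],
    style="oil painting",
)
print(vague)     # a flower
print(specific)  # a red rose in a vase, on a wooden table, soft window light, oil painting
```

Keeping subject, details, and style apart makes it easy to change one of them and see how the image responds.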

Using Powerful Keywords

Powerful keywords are crucial in guiding the image generation process in Stable Diffusion. These keywords help the AI model understand the desired style, object, or context of the image and can be learned through prompt-building resources and tools like ChatGPT.

As you become more familiar with the AI image generation process, you’ll develop an understanding of which keywords produce the most effective results.

Incorporating these powerful keywords into your prompts will ensure that your generated images closely align with your intended vision.

Hands-on Guide to Generating Images with Stable Diffusion

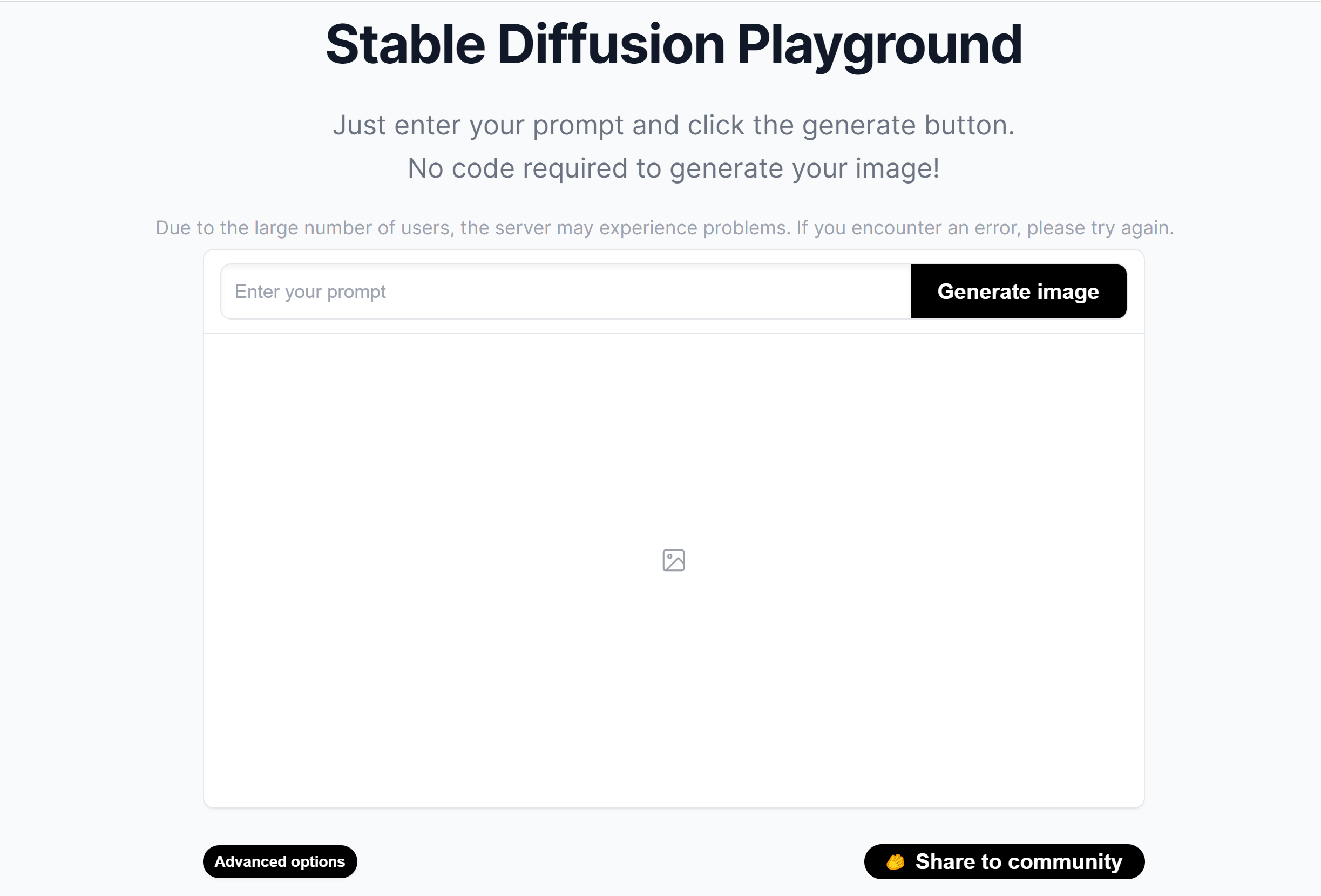

Now that we’ve covered the basics of Stable Diffusion and the importance of mastering prompts, it’s time to dive into the hands-on process of generating images. This section will guide you through inputting text prompts, customizing parameters, and saving and sharing your AI-generated images.

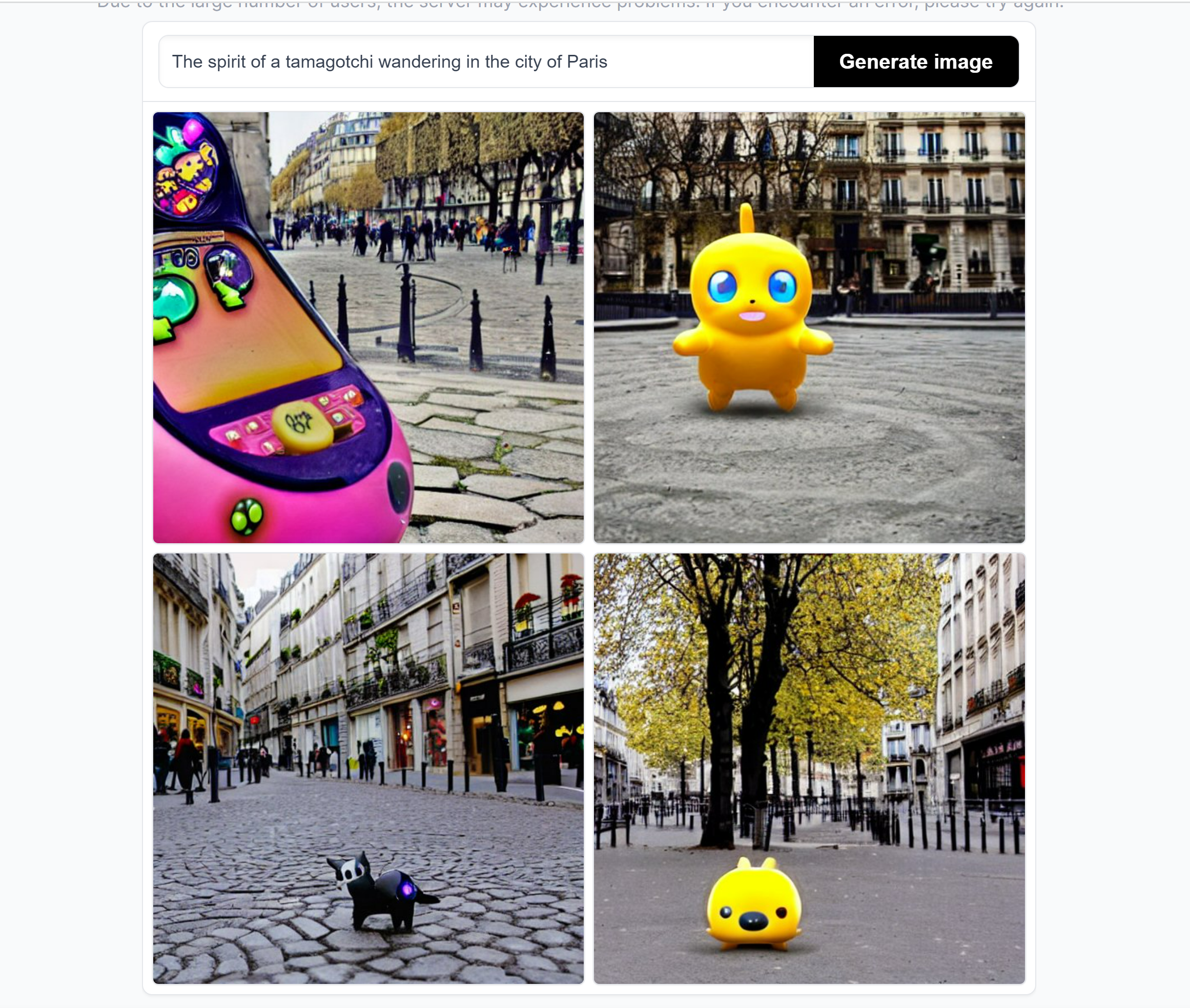

To generate images with Stable Diffusion, simply input a description for the desired image in the text prompt field. If there are any specific elements you want to exclude from the image, input them in the optional negative prompt field.

Once your prompts are set, you can adjust any available parameters to refine the image generation process further. When ready, click the “Generate” button to generate your image.

Upon generating your image, you can save it to your computer or share it with others. The subsections that follow cover each of these steps in more detail.

Inputting Text Prompts

When inputting text prompts for Stable Diffusion, keep your descriptions clear and specific. The AI model relies on these prompts to understand the context and desired outcome of the generated image.

Along with your main prompt, you can also input negative prompts to indicate what should not be included in the image, such as specific colors, shapes, or objects. By providing both main and negative prompts, you can effectively guide the AI model in generating an image that closely aligns with your vision.
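If you would rather send prompts from a script than type them into the browser, the sketch below shows one way to do it. It rests on assumptions that are not covered in this post: that you run the Automatic1111 web UI locally, that it was started with its optional --api setting, and that it listens on the default local address. The helper names are our own.

```python
import json
import urllib.request

# Assumption: the web UI was started with --api and listens on this address.
DEFAULT_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def build_txt2img_payload(prompt, negative_prompt=""):
    """Return the request body for one text-to-image generation."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {"prompt": prompt.strip(), "negative_prompt": negative_prompt.strip()}


def generate(payload, url=DEFAULT_URL, timeout=300):
    """POST the payload and return the decoded JSON response.

    The response is expected to hold base64-encoded images under "images".
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


payload = build_txt2img_payload(
    prompt="a red rose in a vase",
    negative_prompt="blurry, text, watermark",
)
# With the web UI running, generate(payload) would send the request.
```

The main prompt and the negative prompt travel as two separate fields, mirroring the two text boxes in the interface.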

Customizing Parameters

Customizing parameters in Stable Diffusion allows you to refine the image generation process by adjusting various settings, such as model, style, size, and number of images.

While some users prefer to stick with the default settings, customizing parameters can help you achieve more accurate and visually appealing results.

Experimenting with different parameter settings can provide valuable insights into the impact of these adjustments on the final generated image.
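When you experiment, it helps to keep your settings in one place and check them before generating. The sketch below is illustrative only: the field names and defaults follow what the Automatic1111 web UI commonly exposes (an assumption on our part), and the multiple-of-8 check reflects how the version 1 and 2 base models downscale images internally.

```python
from dataclasses import dataclass, asdict


@dataclass
class GenerationSettings:
    width: int = 512
    height: int = 512
    steps: int = 20          # number of denoising steps
    cfg_scale: float = 7.0   # how strongly the prompt steers the image
    seed: int = -1           # -1 asks the web UI to pick a random seed
    batch_size: int = 1      # images generated per run

    def validate(self):
        """Raise ValueError on settings that cannot work; return self otherwise."""
        for name in ("width", "height"):
            value = getattr(self, name)
            if value <= 0 or value % 8 != 0:
                raise ValueError(f"{name} must be a positive multiple of 8")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        return self


settings = GenerationSettings(width=768, height=512, steps=30).validate()
request_fields = asdict(settings)  # plain dict, ready to merge into a request
```

Changing one field at a time while keeping the seed fixed makes it much easier to see what each setting does.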

Saving and Sharing Generated Images

Once you’ve generated an image with Stable Diffusion, you may want to save it for future use or share it with others. To save an image, simply right-click on it and select “Save Image As”.

If you’re using Stable Diffusion locally, the generated images will be saved in a folder that can be accessed by clicking the “Folder” button. For those using online platforms, follow the platform’s specific instructions for saving or sharing the image.

Sharing your AI-generated art is a great way to showcase the incredible capabilities of Stable Diffusion and inspire others to explore this innovative technology.
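If you generate images from a script instead of the browser, you will need to save them yourself. Some interfaces, including the optional API of the Automatic1111 web UI, return images as base64-encoded text. The helper below is a minimal sketch under that assumption; the function name and the file naming scheme are our own.

```python
import base64
from pathlib import Path


def save_images(encoded_images, out_dir, prefix="image"):
    """Decode base64 strings and write them as numbered PNG files."""
    out_path = Path(out_dir)
    out_path.mkdir(parents=True, exist_ok=True)
    saved = []
    for index, encoded in enumerate(encoded_images, start=1):
        # Some responses prefix the data with "data:image/png;base64,".
        _, _, data = encoded.rpartition(",")
        target = out_path / f"{prefix}-{index:03d}.png"
        target.write_bytes(base64.b64decode(data))
        saved.append(target)
    return saved
```

Calling save_images(response["images"], "outputs") on a decoded response would write image-001.png, image-002.png, and so on into an outputs folder.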

Advanced Techniques for Stable Diffusion

For users looking to take their AI-generated art to the next level, Stable Diffusion offers several advanced techniques to enhance image generation further.

In this section, we will explore two such techniques: image-to-image generation and photo editing and enhancement. Incorporating these advanced techniques into your workflow can unlock greater creative potential and produce stunning images.

Image-to-image generation allows you to transform one image into another using Stable Diffusion, while photo editing and enhancement techniques, such as inpainting and outpainting, can improve the quality of generated images by fixing defects and adding additional elements.

These advanced techniques give users greater control over the outcome of their AI-generated images and can help achieve more refined and visually appealing results.

Image-to-Image Generation

Image-to-image generation is an advanced technique that enables you to transform one image into another using Stable Diffusion. This powerful process can be used to create entirely new images based on existing ones or to apply specific styles or effects to an input image.

For example, you could use image-to-image generation to turn a city skyline photograph into a futuristic cityscape or apply a Van Gogh-inspired style to a portrait.

The possibilities are virtually limitless with this versatile and innovative technique.
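How far the result drifts from the input image is usually controlled by a strength setting (called denoising strength in some interfaces). The sketch below illustrates one common convention, in which a strength between 0 and 1 decides how much of the denoising schedule is actually run on the noised input. The function name is hypothetical, and individual tools may round differently.

```python
def img2img_plan(num_steps, strength):
    """Return (steps_skipped, steps_run) for an image-to-image pass.

    strength=0.0 keeps the input untouched; strength=1.0 noises it fully,
    which makes the run behave like plain text-to-image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_steps * strength), num_steps)
    return num_steps - steps_run, steps_run


for strength in (0.25, 0.5, 0.75):
    skipped, run = img2img_plan(num_steps=40, strength=strength)
    print(f"strength={strength}: skip {skipped} steps, denoise for {run}")
```

Low values keep the composition of the original photograph, while high values give the prompt more room to reshape it.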

Photo Editing and Enhancement

Photo editing and enhancement techniques improve the quality of images generated by Stable Diffusion. They fix defects or add elements not present in the original image.

Inpainting regenerates only a masked region of the image, which makes it useful for correcting imperfections, while outpainting extends the image beyond its original borders, adding new content around it.

These advanced photo editing and enhancement techniques can refine your AI-generated images and achieve even more stunning results.
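Both techniques rely on a mask that marks which pixels may change. The toy example below uses small nested lists instead of real images to show the idea: inpainting keeps the original wherever the mask is 0, and outpainting enlarges the canvas and masks only the new border. It is a simplified sketch with made-up function names, not how any particular tool implements these features.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0, take generated ones where it is 1."""
    return [
        [g if m else o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


def outpaint_canvas(image, pad, fill=0):
    """Pad an image on all sides and return (canvas, mask of the new area)."""
    width = len(image[0]) + 2 * pad
    canvas, mask = [], []
    for _ in range(pad):
        canvas.append([fill] * width)
        mask.append([1] * width)
    for row in image:
        canvas.append([fill] * pad + list(row) + [fill] * pad)
        mask.append([1] * pad + [0] * len(row) + [1] * pad)
    for _ in range(pad):
        canvas.append([fill] * width)
        mask.append([1] * width)
    return canvas, mask


original = [[1, 1, 1], [1, 1, 1]]
generated = [[9, 9, 9], [9, 9, 9]]
mask = [[0, 1, 0], [0, 0, 0]]                 # repaint only the top-middle pixel
print(composite(original, generated, mask))   # [[1, 9, 1], [1, 1, 1]]

canvas, border_mask = outpaint_canvas([[5, 5], [5, 5]], pad=1)
print(border_mask)  # [[1, 1, 1, 1], [1, 0, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
```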

Custom Models and Training

Custom models and training provide the perfect solution for users seeking even greater control and customization over their AI-generated images. By training custom models with Stable Diffusion, you can create specific styles or objects that may not be possible with the pre-trained base models.

Base models, such as 1.4, 1.5, 2.0, and 2.1, are pre-trained models that can be used for general applications, while custom models are specifically tailored and trained for a particular purpose or usage.

Within the style or subject they were trained for, custom models tend to produce more accurate and consistent results than base models, allowing you to create images more closely aligned with your specific vision or requirements.

Base Models vs. Custom Models

While base Stable Diffusion models offer convenience and minimal setup, they may struggle with more intricate tasks or with reproducing a specific style or object.

On the other hand, custom models require additional time and resources to be established and trained, making them more costly than base models.

However, the benefits of custom models often outweigh the drawbacks, as they provide greater precision and efficacy, allowing for more accurate and visually appealing images to be generated.

Ultimately, the choice between base and custom models will depend on your specific needs and the control you desire over the image generation process.

Training New Models with Stable Diffusion

Training new models with Stable Diffusion involves techniques like Dreambooth and embeddings (also known as textual inversion) to extend the image generation capabilities of the AI model.

Dreambooth is a specialized form of fine-tuning that teaches Stable Diffusion a new concept, such as a particular person or object, from a handful of example images. An embedding takes a lighter approach: it leaves the model's weights untouched and instead learns a new keyword whose vector representation captures the concept, so you can call on it from your prompts.

By training new models with these advanced techniques, you can create custom models that excel at generating images of specific styles or objects, providing even greater control over the final outcome of your AI-generated images.
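The difference between fine-tuning a model and learning an embedding can be shown with a deliberately tiny stand-in. In the sketch below the "model" is just a fixed 2x2 matrix rather than a diffusion network, and every name and number is an assumption made for illustration. The point is that only the embedding vector is updated during training, while the model weights stay frozen.

```python
def apply(weights, vector):
    """Multiply a fixed weight matrix by a vector (stand-in for the frozen model)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def train_embedding(weights, target, steps=200, learning_rate=0.1):
    """Fit only the embedding vector by gradient descent; weights stay frozen."""
    embedding = [0.0] * len(weights[0])
    for _ in range(steps):
        error = [o - t for o, t in zip(apply(weights, embedding), target)]
        # Gradient of the squared error with respect to the embedding.
        gradient = [
            2.0 * sum(weights[r][c] * error[r] for r in range(len(weights)))
            for c in range(len(embedding))
        ]
        embedding = [e - learning_rate * g for e, g in zip(embedding, gradient)]
    return embedding


frozen_weights = [[1.0, 0.5], [0.0, 1.0]]   # never updated
target = [2.0, 1.0]                         # what the new "concept" should produce
embedding = train_embedding(frozen_weights, target)
output = apply(frozen_weights, embedding)   # close to target
```

Because only a small vector is learned, the result is a tiny file that can be shared and used alongside an unchanged base model, whereas Dreambooth produces a modified copy of the model itself.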

Tips and Best Practices for Using Stable Diffusion

As you continue to explore the incredible capabilities of Stable Diffusion, it’s essential to remember some tips and best practices that can help you make the most of this powerful AI image generator.

In this section, we’ll share valuable insights on generating multiple variations of images and post-processing AI images to enhance their quality and overall appearance.

Generating multiple variations of images can increase the likelihood of obtaining usable results and help you test the effectiveness of your prompts.

By experimenting with different input data and parameters, you can create a diverse range of images, giving you more options and a better understanding of how different settings impact the final generated image.

Also, post-processing AI images can enhance quality by fixing defects and adding extra elements through face restoration and inpainting techniques.

Generating Multiple Variations

When testing prompts or adjusting your parameters, generating multiple variations of images is recommended to increase the chances of obtaining usable results.

Generating multiple variations can help you assess the effectiveness of your prompts and provide valuable insights into the impact of different settings on the final image.

By producing multiple image variations, you can also explore a wider range of creative possibilities and ensure a diverse selection of images.
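Variations come from the seed, the number that determines the random noise each image starts from. The sketch below prepares several requests that share a prompt but differ in seed. The helper and the dictionary layout are hypothetical, although "prompt" and "seed" are field names that many Stable Diffusion interfaces use.

```python
import random


def variation_requests(prompt, count, base_seed=None):
    """Build `count` request bodies that differ only in their seed."""
    rng = random.Random(base_seed)
    seeds = rng.sample(range(2**32), count)   # distinct seeds
    return [{"prompt": prompt, "seed": seed} for seed in seeds]


batch = variation_requests("a red rose in a vase", count=4, base_seed=42)
seeds = [request["seed"] for request in batch]
```

Recording the seed of a variation you like lets you regenerate that exact image later, or keep the seed fixed while you fine-tune the prompt.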

Post-Processing AI Images

Post-processing AI images can be a valuable step in enhancing the quality and appearance of your generated images.

Techniques like face restoration and inpainting can fix defects in the generated image or add extra elements not present in the original image.

By incorporating these advanced photo editing and enhancement techniques into your workflow, you can further refine your AI-generated images and achieve even more visually appealing results.

Summary

In conclusion, Stable Diffusion offers a powerful and versatile solution for AI-generated art, enabling creators to produce stunning, high-quality images from simple text prompts.

By understanding the technology behind Stable Diffusion, mastering prompts, and exploring advanced techniques such as image-to-image generation and photo editing, you can unlock the full potential of this incredible AI image generator.

Whether you’re a seasoned digital artist or just starting to explore the world of AI-generated art, Stable Diffusion provides the tools and capabilities to bring your creative vision to life.

Frequently Asked Questions

How do I use Stable Diffusion?

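How you start depends on how you access Stable Diffusion. On an online platform, first sign up for an account (many offer a free tier); a local installation needs no account. Then you can create a prompt or select an existing one and set the parameters, such as image size and artistic style.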

Once you have entered all the settings, click the generate button to create images using the AI-powered Stable Diffusion generator.

Is Stable Diffusion difficult to use?

Overall, Stable Diffusion is not difficult to use, especially with a user-friendly interface such as the web UI created by Automatic1111. With the tools and tutorials available, anyone can learn how to generate amazing images with Stable Diffusion quickly and easily.

How do you use Stable Diffusion image generation?

You can generate images from your own prompt or reuse someone else's. Many image galleries publish the prompt string, model, and seed number alongside each image: find an image you like, click on it to get these details, then copy and paste them into the Stable Diffusion interface and press Generate to create similar images.

Once you have the prompt string, you can vary the wording, the seed, and the other parameters to generate images with different colors, shapes, and textures.

What is Stable Diffusion?

Stable Diffusion is an AI-powered image generation technology that can create high-quality, realistic, and aesthetically pleasing images from text prompts. It works by starting from random noise and gradually removing it, guided by the prompt, until an image emerges.

How do I run Stable Diffusion on my computer?

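To run Stable Diffusion on your computer, download the project file from the GitHub page and extract it, then execute the webui-user.bat file in the stable-diffusion-webui-master folder. You will need a Windows 10 or 11 system with a discrete NVIDIA video card featuring at least 4GB of VRAM.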